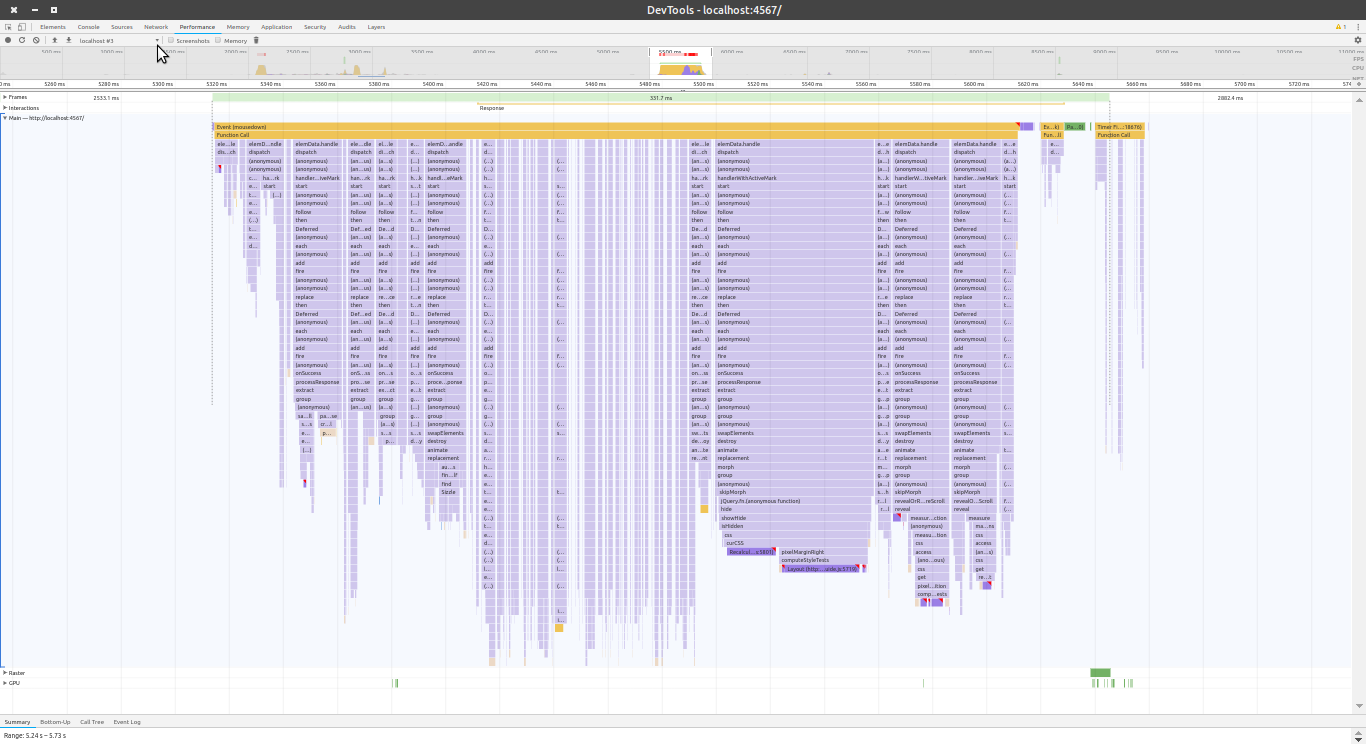

In July 2018 one of our hosting customers was confronted with slow PostgreSQL queries. Statements that usually took under 1 ms were taking multiple seconds. Our customer had already found the slow statements and started to investigate the issue.

We jumped in later and started the usual procedure to analyse slow PostgreSQL statements, using EXPLAIN ANALYZE. The following output was shown for one of the problematic queries:

production database Primary

Aggregate (cost=32988.28..32988.29 rows=1 width=8) (actual time=2877.870..2877.870 rows=1 loops=1)

-> Index Only Scan using index_shipments_on_state on shipments (cost=0.43..32905.89 rows=32955 width=0) (actual time=0.048..2877.089 rows=6423 loops=1)

Index Cond: (state = ANY ('{init,waiting_for_manual_correction,unresolved,unassigned,price_not_calculatable,dispatching_unconfirmed,dispatching_confirmed,pending_check_out,dispatched,picked_up,undeliverable,passed_on}'::text[]))

Heap Fetches: 1339084

Planning time: 0.281 ms

Execution time: 2877.928 ms

(6 rows)

The problem did not occur when sourcing a production dump on local development machines. A restored PITR backup on our PITR host did not suffer from this problem either:

local database of customer

Aggregate (cost=24312.53..24312.54 rows=1 width=8) (actual time=6.948..6.948 rows=1 loops=1)

-> Index Only Scan using index_shipments_on_state on shipments (cost=0.43..24265.94 rows=18637 width=0) (actual time=0.031..5.988 rows=6733 loops=1)

Index Cond: (state = ANY ('{init,waiting_for_manual_correction,unresolved,unassigned,price_not_calculatable,dispatching_unconfirmed,dispatching_confirmed,pending_check_out,dispatched,picked_up,undeliverable,passed_on}'::text[]))

Heap Fetches: 6771

Planning time: 0.322 ms

Execution time: 6.999 ms

(6 rows)

We confirmed that the Index Only Scan was used – which should be fast. However, we also saw a high count of Heap Fetches on the production server. This is usually a bad sign and explains why the query was slow. To understand why, we need to understand a few details about how PostgreSQL stores data and handles Index Only Scans. Here's a simple explanation:

Let's imagine a transaction running a read-only query on a table. The query runs for a very long time and will return multiple rows of data. If another transaction needs to update rows which are to be returned by the running read query, we don't want it to wait for the read query to finish. On the other hand, we don't want our read query to return inconsistent data. MVCC (Multi-Version Concurrency Control) solves this problem:

- To return a consistent state of our database, the running query sees only a snapshot of the data.

- If a row gets updated, a new row is created with the updated data.

- Now there are two row versions, an old one and a new one.

- PostgreSQL keeps track of which row versions should be seen by which transactions.

- The old row version gets marked as “valid until X”

- The new row version gets marked as “valid from X”
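The bookkeeping in the list above can be illustrated with a small Python sketch. It is a deliberately simplified model, not PostgreSQL's actual visibility logic: `xmin` and `xmax` mirror the names of PostgreSQL's system columns, but the `RowVersion` class, the `visible` function, the transaction IDs and the rule "a snapshot sees everything done by smaller transaction IDs" are assumptions made for illustration (the real rules also take commit status and in-progress transactions into account).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RowVersion:
    value: str
    xmin: int                    # transaction that created this version ("valid from")
    xmax: Optional[int] = None   # transaction that replaced or deleted it ("valid until")


def visible(version: RowVersion, snapshot_xid: int) -> bool:
    """Simplified rule: a snapshot taken at snapshot_xid sees every change made
    by transactions with a smaller ID (all of them assumed to be committed)."""
    created_before = version.xmin < snapshot_xid
    not_yet_replaced = version.xmax is None or version.xmax >= snapshot_xid
    return created_before and not_yet_replaced


# Hypothetical history: transaction 100 inserted the row, transaction 105 updated it.
old = RowVersion("state=init", xmin=100, xmax=105)
new = RowVersion("state=dispatched", xmin=105)

long_running_reader = 103   # snapshot taken before the update
later_reader = 110          # snapshot taken after the update

seen_by_long_reader = [v.value for v in (old, new) if visible(v, long_running_reader)]
seen_by_later_reader = [v.value for v in (old, new) if visible(v, later_reader)]

print(seen_by_long_reader)   # the long-running reader still gets the old version
print(seen_by_later_reader)  # newer queries get the new version
```

Both versions exist side by side in the table until no snapshot can see the old one any more.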

The still-running read query fetches the old row versions, while newer queries get the new row versions. But there is a problem: if this process continued indefinitely, our database would grow endlessly. To prevent this, we have VACUUM. VACUUM regularly checks whether there are row versions which can be removed. This is the case when there is no transaction left that needs these old row versions. So, when our read query finishes and we end our transaction, VACUUM will remove the old row versions which were superseded by the updates of our second transaction.

VACUUM doesn't need to check every row version (called a tuple in PostgreSQL terminology) every time it runs. It maintains a Visibility Map which (briefly) records which tuples are visible to all current and all future transactions. The map doesn't hold one entry per tuple but one per page, covering all tuples stored in that page of the table. So if VACUUM checks a page for “dead” tuples and all remaining tuples are visible to all transactions, it sets the corresponding bit in the Visibility Map. The next time it runs, it doesn't need to check this page again. If an UPDATE or DELETE statement changes a tuple in this page, the bit is cleared again, and the next VACUUM run will check the tuples in this page again.

This is just a really short explanation of how this works. If you wish to learn more, we recommend reading the linked PostgreSQL documentation. Emanuel's blogpost about PostgreSQL query optimization can give you a deeper understanding too.

What does all this have to do with slow Index Only Scans?

Let's run a query using an Index Only Scan. The index itself carries no visibility information, so the scan returns ALL matching row versions, and each of them must be checked for whether it should be seen by the current transaction (MVCC). For this, Index Only Scans consult the Visibility Map, which gets updated by VACUUM runs. PostgreSQL checks whether the page where the returned row version is stored is marked as visible to all transactions. If it is, we can continue with the next matching row version. If it isn't, we must fetch the tuple from the table heap (the main data storage area of the table) to check whether this row version is visible to the current transaction. This is comparatively slow. Thus, a high number of heap fetches is bad. There are a few explanations for a constantly high (or growing) number of heap fetches:

- more updates/deletes in the table than auto VACUUM is capable of scanning / cleaning up

- no VACUUM for that table

- VACUUM is not working
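To make the link between VACUUM, the Visibility Map and heap fetches more tangible, here is a toy Python model. Everything in it is an assumption for illustration: the `HeapTuple` and `Page` classes, the `vacuum` and `heap_fetches` functions, the `oldest_needed_xid` parameter and all transaction IDs are hypothetical, and real PostgreSQL is considerably more involved. The sketch only shows the principle that an Index Only Scan has to visit the heap for every index entry whose page is not marked all-visible, and that dead row versions which VACUUM may not remove keep pages in that state.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class HeapTuple:
    xmin: int                    # transaction that created the version
    xmax: Optional[int] = None   # set once the version was updated or deleted


@dataclass
class Page:
    tuples: List[HeapTuple] = field(default_factory=list)
    all_visible: bool = False    # this page's bit in the visibility map


def vacuum(pages: List[Page], oldest_needed_xid: int) -> None:
    """Drop dead versions nobody needs any more, then set the all-visible bit
    on pages that only hold versions visible to every transaction."""
    for page in pages:
        page.tuples = [t for t in page.tuples
                       if t.xmax is None or t.xmax >= oldest_needed_xid]
        page.all_visible = all(
            t.xmax is None and t.xmin < oldest_needed_xid for t in page.tuples
        )


def heap_fetches(pages: List[Page]) -> int:
    """One index entry per stored row version; a heap fetch is needed for
    every entry that points into a page which is not all-visible."""
    return sum(len(p.tuples) for p in pages if not p.all_visible)


def make_table() -> List[Page]:
    # Three pages, each with 2 live versions and 4 dead ones (hypothetical IDs).
    pages = []
    for _ in range(3):
        live = [HeapTuple(xmin=210), HeapTuple(xmin=220)]
        dead = [HeapTuple(xmin=100, xmax=200 + i) for i in range(4)]
        pages.append(Page(tuples=live + dead))
    return pages


# Something still claims to need versions from transaction 150 onwards.
blocked = make_table()
vacuum(blocked, oldest_needed_xid=150)
fetches_blocked = heap_fetches(blocked)

# Nothing older than transaction 300 is needed any more.
clean = make_table()
vacuum(clean, oldest_needed_xid=300)
fetches_clean = heap_fetches(clean)

print(fetches_blocked, fetches_clean)
```

In the blocked case every row version, dead or alive, costs a heap fetch. Once VACUUM is allowed to clean up, the pages become all-visible and the scan can be answered from the index alone.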

We checked the number of updates and transactions on the table and didn't see any problematic behaviour. A simple test run of VACUUM on the table did not change anything about the heap fetches. We also checked via SELECT schemaname,relname,last_autovacuum,last_autoanalyze,autovacuum_count,autoanalyze_count FROM pg_stat_all_tables; whether auto VACUUM was being executed for the affected table. Finally, a VACUUM VERBOSE led us to the root of the issue:

...

DETAIL: 1349534 dead row versions cannot be removed yet.

...

This means that there were 1349534 old row versions, but VACUUM refused to remove them because it considered them still needed. The Visibility Map gets no updates for the pages holding these rows, because these row versions need to be checked again in the next VACUUM run. This means that our Index Only Scan cannot benefit from fast Visibility Map lookups.

We needed to find out the reason why these row versions were not deleted by VACUUM. One reason can be a long running transaction (either active or idle) which still requires very old row versions. So we searched for active or idle transactions:

SELECT pid, usename, application_name, state, xact_start, query FROM pg_stat_activity WHERE xact_start IS NOT NULL;

Of course the query returned some transactions but they were just a few seconds old and disappeared shortly afterwards. We did not fully trust this and started digging around with locks, searched for broken VM files and searched again for transactions with old xmin values:

SELECT pid, datname, usename, state, backend_xmin FROM pg_stat_activity WHERE backend_xmin IS NOT NULL ORDER BY age(backend_xmin) DESC;

This took a lot of time but it made apparent that the Replica may be responsible for the issue (even though the problem appeared on the Primary):

Our customer was using the Replica not just for failover scenarios but also to execute expensive read queries on it. These queries ran for a long time, so they got canceled when the VACUUM instructions were replicated from the Primary to the Replica. PostgreSQL's binary replication works sequentially, i.e. newer changes will be applied only if the changes that came before have been applied successfully. Since we're dealing with binary replication and the Replica needs to represent the same state as the Primary, all changes including the clean-up initiated by VACUUM must be replicated. If a transaction on the Replica uses old row versions which are already deleted on the Primary, it will cause replication conflicts.

By default, PostgreSQL will wait a short time for transactions on the Replica to finish so that it can apply the VACUUM changes afterwards. However, to prevent a long replication lag, it will kill the transaction on the Replica after a configured time period. The parameter name is max_standby_streaming_delay, and its default is 30 seconds. We could have increased the time-out value to cover the time needed for the queries on the Replica, but as this Replica's main purpose was to be an automatic failover instance, this wouldn't have been a good idea.

There is an alternative, and it is the one we had chosen: enabling hot_standby_feedback to prevent these cancellations. Put briefly, this parameter enables the PostgreSQL Replica to tell the Primary which row versions are still needed by transactions running on the Replica. In return we accepted a bit more disk usage, because VACUUM would sometimes be unable to clean up some old row versions.

Suspecting that the Replica was the root cause of the issue, we checked for long-running transactions on the Replica. We did not find any. We even stopped the Replica, but the issue still persisted. Just to be sure, we disabled hot_standby_feedback, started a VACUUM VERBOSE and saw that the old row versions got cleaned up.

The query execution time was fine again:

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- --

Aggregate (cost=332.62..332.63 rows=1 width=8) (actual time=3.351..3.351 rows=1 loops=1)

-> Index Only Scan using index_shipments_on_state on shipments (cost=0.43..316.34 rows=6509 width=0) (actual time=0.046..2.453 rows=6411 loops=1)

Index Cond: (state = ANY ('{init,waiting_for_manual_correction,unresolved,unassigned,price_not_calculatable,dispatching_unconfirmed,dispatching_confirmed,pending_check_out,dispatched,picked_up,undeliverable,passed_on}'::text[]))

Heap Fetches: 476

Planning time: 0.271 ms

Execution time: 3.405 ms

(6 rows)

The question of why weeks- or months-old dead rows were kept still remains. If hot_standby_feedback is enabled, the Replica regularly tells the Primary which transaction ID is the oldest one it still needs. If there is no transaction on the Replica needing any old row versions, the Replica has nothing to hold back on the Primary. It's hard to verify our suspicion after the fact, but it's likely that there was a dead replication slot as described here. However, since we can't check that after disabling hot_standby_feedback, there is no way to be sure. At the moment there is no Replication Slot on the Primary other than the active one for the hot standby (Replica). Possibly the slot got removed when we disabled hot_standby_feedback. Otherwise we have no explanation for the behaviour of hot_standby_feedback.
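The suspected mechanism can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not PostgreSQL's implementation: the functions `xmin_horizon` and `removable`, their parameters and all transaction IDs are hypothetical, and transaction ID wraparound is ignored. The idea it shows is that VACUUM may only remove row versions older than the oldest transaction ID that any party still claims to need, so a single stale value (for example one left behind in an unused replication slot) is enough to hold back clean-up on the Primary.

```python
from typing import Iterable, Optional


def xmin_horizon(local_backends: Iterable[int],
                 standby_feedback: Optional[int],
                 replication_slots: Iterable[Optional[int]],
                 next_xid: int) -> int:
    """Oldest transaction ID whose row versions must still be kept.
    Simplified: ignores transaction ID wraparound and prepared transactions."""
    candidates = list(local_backends)
    if standby_feedback is not None:
        candidates.append(standby_feedback)
    candidates.extend(x for x in replication_slots if x is not None)
    # With nobody holding anything back, everything up to next_xid is fair game.
    return min(candidates, default=next_xid)


def removable(dead_xmax: int, horizon: int) -> bool:
    """A dead row version can go once its deleting transaction is older than the horizon."""
    return dead_xmax < horizon


next_xid = 5_000_000
dead_row_deleted_at = 3_000_000   # hypothetical dead row version

# A stale slot still advertises a very old xmin although no query needs it.
stale = xmin_horizon(local_backends=[4_999_990],
                     standby_feedback=None,
                     replication_slots=[1_000_000],
                     next_xid=next_xid)

# Same situation after the stale value is gone.
healthy = xmin_horizon(local_backends=[4_999_990],
                       standby_feedback=None,
                       replication_slots=[],
                       next_xid=next_xid)

print(stale, removable(dead_row_deleted_at, stale))
print(healthy, removable(dead_row_deleted_at, healthy))
```

In this model, short-lived transactions on the Primary never show up as the culprit, which matches what we saw: the value holding back VACUUM does not belong to any client connection.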

Conclusion

We failed to notice hot_standby_feedback at an early point of debugging and searched for transactions from clients instead of an issue caused by replication configuration. Even though the parameter shouldn't behave like this, we should have taken it into account at an earlier stage of debugging (e.g. after seeing that there were no long running transactions on the Primary).